Appendix Three – Program Evaluation: Student Improvement and Teaching Effectiveness

(The data analysis and written report in Appendix 3 were prepared by Dr. Lixia Cheng, PLaCE Associate Director of Evaluation. Please contact clixia@purdue.edu with any questions.)

The ACE-In: An In-house English Language Proficiency Test

The Assessment of College English–International (ACE-In) is an Internet-based, semi-direct test of academic English proficiency for international ESL undergraduate students at Purdue University. The ACE-In was initially developed as part of the PLaCE initiative in Academic Year 2013–14 by Prof. Emerita April Ginther and her testing office graduate assistants in the Oral English Proficiency Program (OEPP), Purdue’s International Teaching Assistants (ITA) program. Since the establishment of the PLaCE program in Summer 2014, the ACE-In has undergone three major rounds of revisions and upgrades carried out by PLaCE testing staff in collaboration with DelMar Software Development, LLC.

“ACE-In 2020” is a recent version of the ACE-In that, thus far, has been used only with remote proctoring by ProctorU® during the COVID-19 pandemic and, subsequently, for summer testing of “no-score” students located in various parts of the world. “ACE-In 2020,” which has two comparable test forms, combines three sections of independent or integrated language assessment tasks into one full test. Table 1 shows the structure of “ACE-In 2020,” including which language subskills are assessed in each section and what rating and scoring work is required. Compared to earlier versions of the ACE-In, “ACE-In 2020” has one fewer section: it omits Word Deletion (also known in the research literature as Cloze-elide) to minimize exposure of test content, particularly of Word Deletion items, which are the hardest type of ACE-In item to develop and restock.

Table 1 - "ACE-In 2020" Test Structure

| Section | # of Rated Items | Language Skills Assessed | Rating/Scoring |
| --- | --- | --- | --- |
| Listen and Repeat | 12 short sentences with 15–16 syllables or 20–21 syllables in each sentence | Grammatical accuracy and meaning retention in spoken language production; listening comprehension | Blind rated by 2–3 trained lecturer-raters in PLaCE, using a rating scale |
| Oral Reading | 2 passages with 155–165 words in each passage | Oral Reading: Expression, Accuracy, and Rate (EAR) | Expression: blind rated by 2–3 trained lecturer-raters, using a rating scale; Pronunciation Accuracy: computer calculated based on human annotations by a lecturer; Rate (words per minute): computer calculated based on annotations by a lecturer or admin staff member |
| Speaking and Listening | 3 items: Express Your Opinion; Pros and Cons; Summarize a Conversation | Free-response speaking; listening comprehension | Blind rated by 2–3 trained lecturer-raters in PLaCE, using an adapted Oral English Proficiency Test (OEPT) rating scale |

However, for the 56 ENGL 110 students in Fall 2021 and the 3 ENGL 110 students in Spring 2022 who requested an exemption from ENGL 111, the “ACE-In 2019” exam, which has eight comparable test forms and includes a Word Deletion section, was administered in an instructional computer lab on campus. Table 2 shows the structure of “ACE-In 2019,” including which language subskills are assessed in each section and what rating and scoring work is required.

Table 2 - "ACE-In 2019" Test Structure

| Section | # of Rated Items | Language Skills Assessed | Rating/Scoring |
| --- | --- | --- | --- |
| Word Deletion | 2 passages; total 60 items | Silent reading, vocabulary, grammar | Machine scored |
| Listen and Repeat | 12 short sentences with 15–16 syllables or 20–21 syllables in each sentence | Grammatical accuracy and meaning retention in spoken language production; listening comprehension | Blind rated by 2–3 trained lecturer-raters in PLaCE, using a rating scale |
| Oral Reading | 2 passages with 155–165 words in each passage | Oral Reading: Expression, Accuracy, and Rate (EAR) | Expression: blind rated by 2–3 trained lecturer-raters, using a rating scale; Pronunciation Accuracy: computer calculated based on human annotations by a lecturer; Rate (words per minute): computer calculated based on annotations by a lecturer or admin staff member |
| Speaking and Listening | 3 items: Express Your Opinion; Pros and Cons; Summarize a Conversation | Free-response speaking; listening comprehension | Blind rated by 2–3 trained lecturer-raters in PLaCE, using an adapted Oral English Proficiency Test (OEPT) rating scale |
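The Oral Reading Rate score in Tables 1 and 2 is described only as "computer calculated based on annotations." As an illustration, the sketch below assumes a hypothetical simplification in which an annotator records the number of words read and the start and end times of the reading; the function name `words_per_minute` and its inputs are illustrative and do not describe the actual ACE-In software.

```python
def words_per_minute(words_read: int, start_sec: float, end_sec: float) -> float:
    """Return a reading rate in words per minute.

    Hypothetical: assumes annotators mark when reading starts and ends and
    count the words read; the real ACE-In procedure may differ.
    """
    duration_sec = end_sec - start_sec
    if duration_sec <= 0:
        raise ValueError("end time must be after start time")
    # Convert the duration to minutes before dividing.
    return words_read / (duration_sec / 60.0)


# Made-up example: a 160-word passage read in 75 seconds.
rate = words_per_minute(160, start_sec=2.0, end_sec=77.0)
print(f"{rate:.1f} wpm")  # prints "128.0 wpm"
```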

The ACE-In is designed to “bias for best,” that is, to elicit test-takers’ best performances. No special outside content, cultural knowledge, or field-specific information is required to respond to ACE-In items. Items are designed so that test-takers can formulate an adequate response using only general information that any freshman can reasonably be expected to possess. Test-takers are given sufficient time to prepare for and respond to each ACE-In item.

For test security reasons, the ACE-In has been administered either in an ITaP instructional lab on Purdue’s West Lafayette campus or via remote proctoring by ProctorU®. From Fall 2017 until the onset of the COVID-19 pandemic, the standard arrangement in a normal academic year was for PLaCE to administer the ACE-In twice a year, as a baseline measure and as a program-exit exam, to all international undergraduate students placed into the ENGL 110–111 course sequence based on their TOEFL iBT or IELTS scores. Large-scale pre- and post-testing with the ACE-In was suspended because of challenges caused by the pandemic, including the fact that in Academic Year 2020–21 most ENGL 110 and ENGL 111 students took their courses online from their home countries.

The ACE-In: An Intrinsic Component of PLaCE

The ACE-In has been embedded in the PLaCE program in several ways. PLaCE lecturers and testing office staff members also act as raters of ACE-In test responses. PLaCE lecturers report that their dual roles as teachers and raters facilitate their work in both areas and give them a deeper and broader perspective on the English proficiency of Purdue undergraduate students than they would have if they performed only one of those roles. The rater training sessions and ACE-In rating scale development work that our lecturers have engaged in have also created a community of practice by providing venues for lecturers to share what they value as English language instructors and what they expect of ENGL 110 and ENGL 111 students’ language development.

The ACE-In also serves as a metric for ESL proficiency, either as a snapshot of current proficiency or as a measure of language growth over time. For example, toward the end of each normal fall or spring semester, a small percentage of outstanding ENGL 110 students earn an exemption from ENGL 111 and can therefore proceed directly into a Written Communication course such as ENGL 106, ENGL 106 INTL, or SCLA 101. The major criteria for evaluating an applicant’s eligibility for an ENGL 111 exemption are, first and foremost, their ACE-In test scores, followed by their ENGL 110 course performance. Thus, ACE-In test scores have been used within the PLaCE program to inform high-stakes decisions, including which ENGL 110 students demonstrate sufficiently well-developed, balanced language proficiency to be exempted from ENGL 111. Additionally, in normal academic years, PLaCE administrators have used ACE-In scores as pre-post measures of students’ longitudinal language development and as an important source of evidence for evaluating program effectiveness in the ENGL 110–111 course sequence.

ENGL 110 and 111 Students’ Language Improvement Demonstrated in Vocabulary Size Test Score Gains

Even though the Fall 2021 cohort did not take ACE-In pre- and post-tests because of lingering effects of the COVID-19 pandemic (e.g., social distancing requirements for in-lab ACE-In administration and strict quarantine and isolation policies), data were collected on these international undergraduate students’ pre- and post-test performance on the Vocabulary Size Test (VST), a free, publicly available Internet-based test. Detailed information about this vocabulary test is available at https://my.vocabularysize.com/.

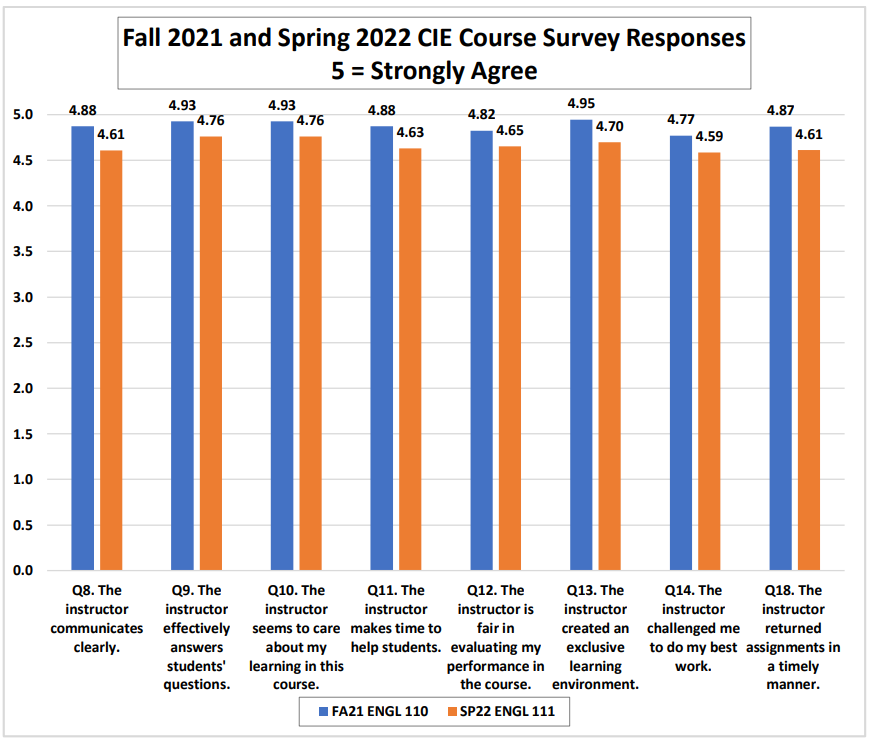

Table 3 lists descriptive statistics for the primary metric of ESL vocabulary knowledge provided by the VST, i.e., the number of word families. The data come from the Fall 2021 cohort’s pretest, completed between August 21 and September 5, 2021, and their post-test, completed about seven months later between March 29 and April 19, 2022; both were given as homework. Table 3 and Figure 1 both indicate that students in the ENGL 110–111 course sequence increased their vocabulary size during their PLaCE journey in AY 2021–22. Because the Word Families variable was not normally distributed in either the pretest or the post-test, a non-parametric test was used, and it confirmed a statistically significant increase (Wilcoxon signed-rank test: Z = 2.15, p = .031; effect size r = 0.16, a small effect).
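The reported effect size is consistent with the common convention r = |Z| / √N for a Wilcoxon signed-rank test, with N taken as the number of paired observations (181 students). The source does not state which formula was used, so the check below is a sketch under that assumption; the function name is illustrative.

```python
import math


def wilcoxon_effect_size(z: float, n_pairs: int) -> float:
    """Effect size r = |Z| / sqrt(N) for a Wilcoxon signed-rank test.

    Assumes N counts paired observations; some authors divide by the total
    number of observations (2N) instead, which yields a smaller r.
    """
    return abs(z) / math.sqrt(n_pairs)


r = wilcoxon_effect_size(2.15, 181)
print(round(r, 2))  # prints 0.16
```

By the usual rule of thumb (about 0.1 small, 0.3 medium, 0.5 large), a value of 0.16 falls in the small range, matching the interpretation above.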

Table 3. Descriptive Statistics on VST Pretest and End-of-2nd Semester Test Results for FA21 Cohort (N=181)

| Variable | N | Mean | Std Dev | Median | Min | Max | 95% Confidence Interval for Mean |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Pretest Word Families | 181 | 9811 | 1794 | 9700 | 5400 | 13200 | [9548, 10074] |
| Post-test Word Families at End of 2nd Semester | 181 | 10036 | 1864 | 10100 | 4800 | 13400 | [9763, 10310] |
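The confidence intervals in Table 3 can be reproduced, to within rounding, from the reported mean, standard deviation, and N using a t-based interval, mean ± t × SD / √N. The sketch below assumes that method (the source does not say how the intervals were computed) and hard-codes the two-sided 95% critical value of Student’s t for 180 degrees of freedom, about 1.973, as found in standard t tables.

```python
import math

# Assumed: two-sided 95% critical value of Student's t with
# df = N - 1 = 180, taken from standard t tables.
T_CRIT_DF180 = 1.9732


def mean_ci(mean: float, sd: float, n: int, t_crit: float = T_CRIT_DF180):
    """Return (lower, upper) bounds of a t-based confidence interval for a mean."""
    se = sd / math.sqrt(n)  # standard error of the mean
    margin = t_crit * se
    return mean - margin, mean + margin


for label, mean, sd in [("Pretest", 9811, 1794), ("Post-test", 10036, 1864)]:
    lower, upper = mean_ci(mean, sd, 181)
    print(f"{label}: [{lower:.0f}, {upper:.0f}]")
```

With these inputs the pretest interval comes out as [9548, 10074]. The post-test upper bound comes out as 10309 rather than the reported 10310, a one-word-family difference plausibly due to rounding of the published mean and SD.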

Figure 1. FA21 Cohort's Vocabulary Size Growth Shown in VST Pretest and End-of-2nd Semester Test Results

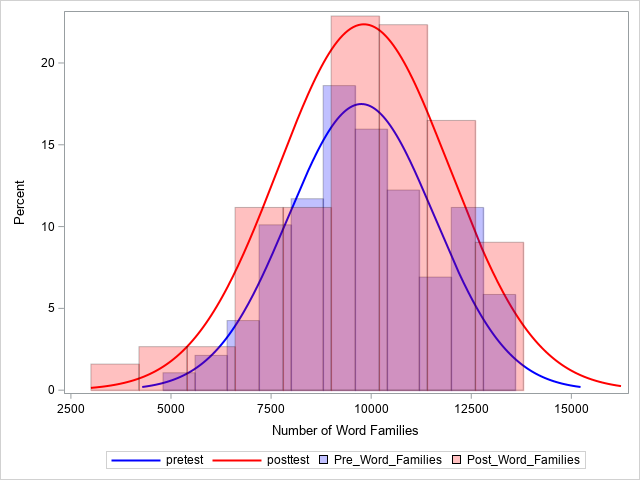

PLaCE Instructors’ Teaching Effectiveness: Evidence from CIE ENGL 110 and 111 Course Evaluations

In Academic Year 2021–22, PLaCE students’ evaluations of ENGL 110 and ENGL 111, collected by Purdue’s Center for Instructional Excellence (CIE) at the end of each semester, again provided strong evidence of students’ positive opinions of their instructors and of course delivery. Across 28 ENGL 110 sections in FA2021, 256 of 372 students completed the CIE survey at the end of the Fall 2021 semester (response rate: 68.8%). Across 23 ENGL 111 sections in SP2022, 202 of 292 students completed the CIE course survey (response rate: 69.2%).
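Each response rate above is the number of completed surveys divided by the number of students, expressed as a percentage. A quick arithmetic check in Python, using the counts reported above (the function name is illustrative):

```python
def response_rate(completed: int, total: int) -> float:
    """Percentage of students who completed the survey."""
    return 100.0 * completed / total


print(f"ENGL 110, FA2021: {response_rate(256, 372):.1f}%")  # prints 68.8%
print(f"ENGL 111, SP2022: {response_rate(202, 292):.1f}%")  # prints 69.2%
```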

Figure 2 summarizes FA2021 ENGL 110 and SP2022 ENGL 111 students’ average ratings on the eight CIE survey questions about their course instructor. In FA2021, average item ratings ranged from 4.77 to 4.95, where a rating of 5.0 indicates strong agreement with the statement. The SP2022 end-of-semester surveys on ENGL 111 registered slightly lower item ratings, though group averages remained strong, ranging from 4.59 to 4.76.

The SU2022 Think Summer section of ENGL 111 and Summer Start section of ENGL 110 are not included in this report, due to low enrollments in these summer courses.

Figure 2 - FA21 and SP22 ENGL 110/111 Students’ Responses to CIE End-of-Semester Course Survey